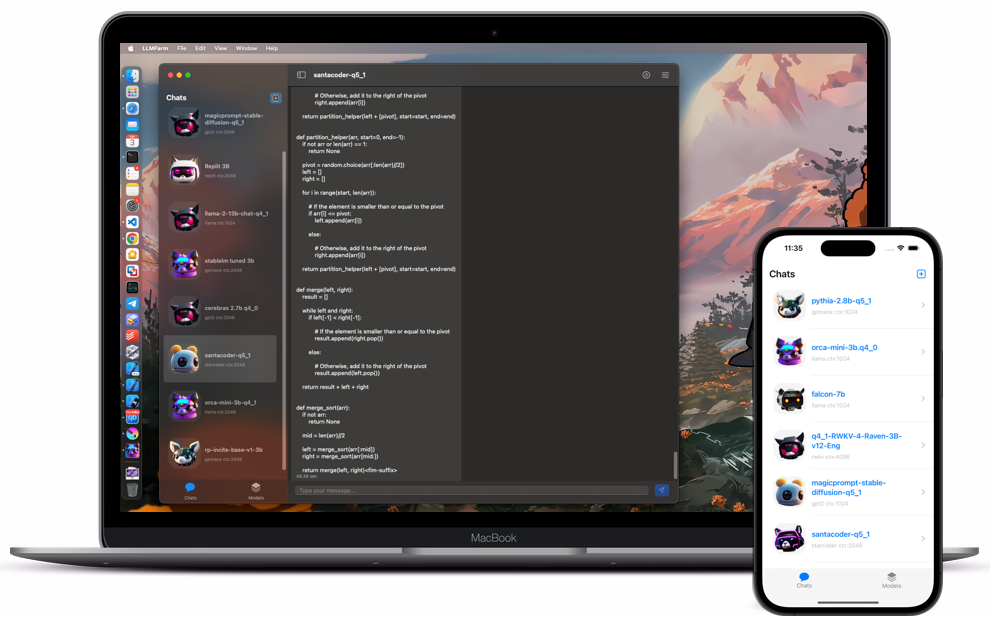

Run LLaMA and other LLMs locally on iOS and macOS.

Absolutely free, open source and private.

LLM Farm provides every feature free of charge, with no hidden fees, subscriptions, or feature limitations.

View on GitHub

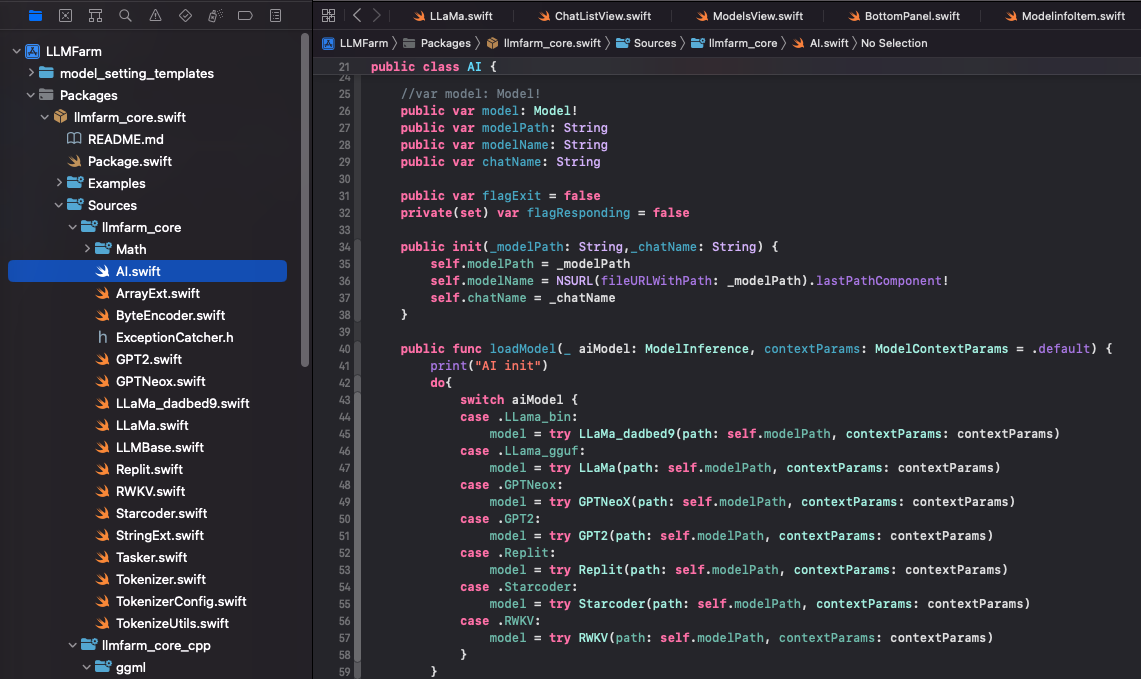

The core is a Swift library based on llama.cpp, ggml, and other open source projects that lets you run inference for a variety of models. Its class hierarchy is designed so that you can add your own inference implementation.

View Core repo

You can download these models from Hugging Face.

A popular model from Meta. Requires a device with 8 GB of RAM.

A popular model from Microsoft: a lightweight, state-of-the-art open model with 3.8 billion parameters, trained on the Phi-3 datasets.

Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models.

MobileVLM is a competent multimodal vision language model (MMVLM) targeted to run on mobile devices.

A GPT-2 model intended to generate prompt text for image-generation AIs.

The TinyLlama project aims to pretrain a 1.1B Llama model on 3 trillion tokens.

A popular Chinese model.